Amazon Alexa users given false information attributed to Full Fact’s fact checks

Amazon’s interactive voice assistant Alexa has given users incorrect information on topics ranging from MPs’ expenses to the origins of the Northern Lights, apparently repeating false and misleading claims which have been the subject of Full Fact fact checks.

The issue—which Amazon has said it was working to resolve and which may now be fixed—was flagged to us by a reader who got in touch earlier this week. In response to the question “Echo, were the Northern Lights recently seen worldwide a natural occurrence?”, Alexa replied: “From fullfact.org—the Northern Lights seen in many parts of the world recently were not a natural occurrence, but generated by the HAARP facility in Alaska.”

This is not correct, as the fact check Alexa appeared to be referencing clearly said.

We tested this ourselves and on Tuesday found several other examples of Alexa giving out wrong information apparently based on our fact checks.

In response to different questions, it (incorrectly) said the following, in each case attributing the answer to Full Fact:

- That “Prime Minister Sir Keir Starmer has announced that the UK will be boycotting diplomatic relations with Israel”. (He hasn’t.)

- That “Members of Parliament can claim £50 for a breakfast”. (They can’t.)

- That “Mike Tyson spoke on CNBC explaining his support for Palestine and encouraging a boycott of Israel”. (There’s no evidence this is the case.)

And when we asked a question via the Alexa app, it told us “there are seven and a half million people on NHS waiting lists” (which is not correct according to NHS England data), adding: “Learn more on fullfact.org”.

This didn’t happen with every claim we checked. For example, when asked whether Nurofen tablets contain graphene oxide, Alexa correctly quoted our recent fact check, saying: “From fullfact.org—Graphene oxide is not an ingredient in Nurofen tablets.” It’s also not clear if incorrect information was shown to all Alexa users or only a subset, how many wrong answers were given and for how long it has been a problem.

On Wednesday, an Amazon spokesperson told us: “These answers are incorrect and we are working to resolve this issue.”

The problem may now have been resolved, although we can’t be certain: when we checked again later on Wednesday, we were unable to replicate the incorrect responses listed above. But we have contacted Amazon again to try to understand what went wrong, when the problem started and how the company plans to ensure it doesn’t recur.

Why did this happen?

This is clearly a big problem. Not only were people looking for trusted information given incorrect answers, they were also told that Full Fact was the source of these incorrect answers—which might make the false claims seem more legitimate, or undermine people’s trust in fact checkers more generally.

Without knowing exactly how Amazon uses the internet to find answers to users’ questions to Alexa, we can’t say for sure why this happened. Amazon hasn’t given us an explanation of what went wrong.

However, the examples given above suggest that when Alexa was attempting to answer a user's question about something we had fact checked, it read the “claim” part of our check, and mistakenly reported that as the answer to the related question.

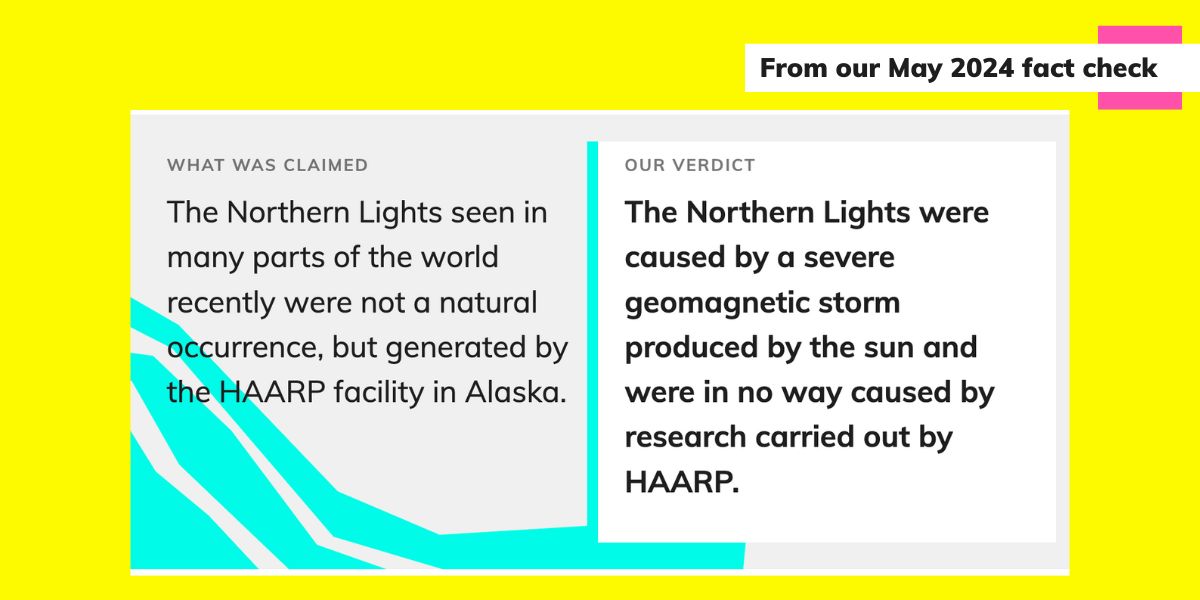

Almost all of our fact checks have two boxes at the very top, showing a brief explanation of the claim being fact checked, and then a short summary of our verdict. Here’s an example from our Northern Lights fact check:

Our fact check clearly states that the Northern Lights were not generated by HAARP (which stands for High-frequency Active Auroral Research Program and is a research facility based in Alaska). But reading only the claim in isolation would give a very different impression.

We use ClaimReview, a widely used web standard, to tag and identify the claims and verdicts in our fact checks as separate pieces of information, making it as easy as possible for social media and search engine companies to recognise what we publish.
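Amazon hasn’t told us how Alexa processes fact checks, so the following is only an illustration of the failure mode suggested above, not a description of Amazon’s actual system. It is a minimal sketch, assuming a fact check is published as ClaimReview JSON-LD using the schema.org properties `claimReviewed` (the claim being checked) and `reviewRating.alternateName` (the verdict in words). The record below is hypothetical and abbreviated, its verdict wording is a paraphrase rather than our published markup, and the function names `naive_answer` and `verdict_aware_answer` are invented for illustration.

```python
import json

# Hypothetical, abbreviated ClaimReview record (schema.org JSON-LD).
# Values are illustrative only, not copied from Full Fact's actual markup.
CLAIM_REVIEW_JSON = """
{
  "@context": "https://schema.org",
  "@type": "ClaimReview",
  "author": {"@type": "Organization", "name": "Full Fact"},
  "claimReviewed": "The Northern Lights seen in many parts of the world recently were not a natural occurrence, but generated by the HAARP facility in Alaska.",
  "reviewRating": {"@type": "Rating", "alternateName": "Incorrect. The Northern Lights were not generated by HAARP."}
}
"""


def naive_answer(review: dict) -> str:
    # Suspected failure mode: read only the claim field and present it,
    # attributed to the fact checker, as if it were the answer.
    publisher = review["author"]["name"]
    return f"From {publisher}: {review['claimReviewed']}"


def verdict_aware_answer(review: dict) -> str:
    # Safer approach: present the claim as a claim, then give the verdict.
    publisher = review["author"]["name"]
    claim = review["claimReviewed"].rstrip(".")
    verdict = review["reviewRating"]["alternateName"]
    return f'{publisher} fact checked the claim "{claim}". Verdict: {verdict}'


if __name__ == "__main__":
    review = json.loads(CLAIM_REVIEW_JSON)
    print(naive_answer(review))          # repeats the false claim
    print(verdict_aware_answer(review))  # makes clear the claim is wrong
```

Both functions read from the same record; the only difference is which field is treated as the answer. That is why, in this hypothetical, a system that picks up the claim field alone would end up attributing a false statement to the fact checker.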

What about other virtual assistants?

While we haven’t conducted an in-depth investigation into this, we have done a few spot checks on two other virtual assistants—Google Assistant and Apple’s Siri.

Neither gave inaccurate answers to the questions which caused problems for Alexa, but on Tuesday we did identify one misleading answer from Google Assistant when we specifically asked it for information from Full Fact.

We asked a smart speaker with Google Assistant installed: “OK Google, according to fullfact.org were the Northern Lights that were recently seen worldwide a natural occurrence?” It told us: “On the website fullfact.org, they say: ‘The Northern Lights seen in many parts of the world recently were not a natural occurrence, but generated by the HAARP facility in Alaska’.” As covered above, this is misleading because the fact check it quoted uses these words only to summarise a claim, which we go on to say is inaccurate.

We’ve asked Google about this, and will update this article if we receive a response.

Full disclosure: Full Fact has received funding from Google and Google.org, Google’s charitable foundation. We disclose all funding we receive over £5,000; you can see these figures here. We are editorially independent and our funders have no editorial control over our content.